Sensitivity Measurement Definition:

The sensitivity of a radio receiver is its ability to amplify weak signals. It is often defined in terms of the voltage that must be applied to the receiver input terminals to give a standard output power, measured at the output terminals. For AM broadcast receivers, several of the relevant quantities have been standardised: the test signal is modulated 30% by a 400 Hz sine wave and is applied to the receiver through a standard coupling network known as a dummy antenna.

The standard output is 50 mW; for all types of receivers, the loudspeaker is replaced by a load resistance of equal value.

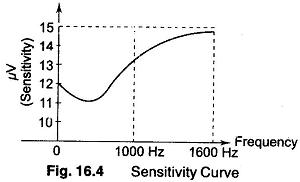

Sensitivity is often expressed in microvolts or in decibels below 1 V, and it is measured at three points along the tuning range when a production receiver is lined up. The sensitivity curve in Fig. 16.4 shows that sensitivity varies over the tuning band. At 1000 kHz, this particular receiver has a sensitivity of 12.7 μV, or -98 dBV (dB below 1 V).
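The relation between the two ways of quoting sensitivity can be checked with a short calculation. The following is a minimal sketch, assuming the usual voltage-ratio definition of the decibel, 20 log10(V / 1 V); the function names `microvolts_to_dbv` and `dbv_to_microvolts` are illustrative and do not come from the text.

```python
import math


def microvolts_to_dbv(microvolts: float) -> float:
    """Convert a voltage in microvolts to decibels relative to 1 V (dBV)."""
    if microvolts <= 0:
        raise ValueError("voltage must be positive")
    volts = microvolts * 1e-6
    # Voltage ratios use 20*log10, since power is proportional to V squared.
    return 20.0 * math.log10(volts)


def dbv_to_microvolts(dbv: float) -> float:
    """Convert a level in dBV back to a voltage in microvolts."""
    return 10.0 ** (dbv / 20.0) * 1e6


# The 12.7 microvolt figure quoted for the example receiver.
print(round(microvolts_to_dbv(12.7), 1))  # -97.9
```

The result, about -97.9 dBV, rounds to the -98 dBV figure quoted above, that is, 98 dB below 1 V.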

The most important factors determining the sensitivity of a superheterodyne receiver are the gains of the IF and RF amplifiers.